7 Different Types of White Box Testing Techniques | White Box Testing Tools

White box testing is a popular testing approach in which the tester has access to the internal code of the software. There are different types of white box testing techniques available, and picking the right one for the task saves a lot of time.

Every web application and software product requires testing. There are different kinds of testing, and the kind is chosen based on the actual requirements.

Proper testing before launch helps you catch errors early. Errors are classified as major or minor depending on their impact on the application. Effective condition coverage, in which each condition is exercised as both true and false, helps testers improve quality.

What is White Box Testing?

Every tester should understand the process before starting in order to get quality results. Testing is generally practiced depending on necessity. It also helps to identify a set of independent paths through the code, because covering each path keeps the process organized.

A proper definition of white box testing helps you understand its objective. White box testing is a popular activity in which professionals design test cases around the design, internal structure, and code of the software. An organized testing activity of this kind yields a wide range of information before the launch.

Why use white box testing in software testing?

Every software producer wants glitch-free, error-free software. The main advantage of white box testing is that the tester can view the code of the software. With access to the source code, it is easier for the tester to find errors quickly.

White box testing is a widely followed step. It concentrates on verifying the flow of inputs and outputs through the application, which helps in improving its design, security, and usability.

What do you verify in White Box Testing?

It is important to understand what white box testing covers in order to determine its value. White box testing is usually considered the first step of the testing activity, and it catches most minor errors early without compromising on quality.

An example of white box testing shows the importance of verification. Because it is the first step of the testing process, it is generally performed by developers before they submit the project. White box testing is also known as clear box testing, structural testing, code-based testing, and open box testing. Many traditional testers prefer to call it transparent box testing or glass box testing.

The following parameters are generally verified in white box testing:

- Output and input flow

- Security elements

- Usability

- Design and so on.

When to perform white box testing

White box testing is largely based on checking the internal functionality of the application. Hence, it focuses on elements related to internal testing.

Working through white box testing examples helps you learn how to perform it. The white box testing methodology is widely used in web applications, where many functions are predefined to suit the requirements.

White box testing is commonly performed in the initial stage of testing or in the final stage of development. Most of the time, developers complete these steps themselves, which saves testers a lot of time.

7 Different types of white-box testing

- Unit Testing

- Static Analysis

- Dynamic Analysis

- Statement Coverage

- Branch Testing Coverage

- Security Testing

- Mutation Testing

Unit Testing

Unit testing is one of the basic steps and is performed in the early stages. Testers and developers use it to check whether a specific unit of code, such as a single function or class, works as intended. Unit testing is commonly performed for every change because it helps in removing basic and simple errors.
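As a minimal sketch of what a unit test looks like, the example below uses Python's standard `unittest` module. The function `apply_discount` and its rules are hypothetical and exist only to give the tests something to check.

```python
import unittest


def apply_discount(price, percent):
    """Return price reduced by percent (hypothetical unit under test)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_returns_original_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


# Run the suite programmatically so the snippet does not exit the interpreter.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test exercises one behavior of the unit, so a failure points directly at the rule that broke.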

Static Analysis

As the term says, static analysis examines the code without executing it. The step is conducted to find possible defects or errors in the application code.

Static analysis is an important step because it filters out simple errors in the initial stage of the process.
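To illustrate the idea, here is a small sketch of a static check built on Python's standard `ast` module. It parses source text and reports bare `except:` clauses without ever running the code. The `SOURCE` snippet and the helper name `find_bare_excepts` are assumptions made for this example; real projects would normally rely on an established linter.

```python
import ast

# Sample code to inspect; it is parsed, never executed.
SOURCE = '''
def load(path):
    try:
        return open(path).read()
    except:
        return None
'''


def find_bare_excepts(source):
    """Return line numbers of 'except:' clauses that name no exception type."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


print(find_bare_excepts(SOURCE))  # prints [5]
```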

Dynamic Analysis

Dynamic analysis is the step that follows static analysis. Many teams perform both static and dynamic analysis together.

Dynamic analysis executes the source code and analyzes its behavior against the requirements. The final stage of the step analyzes the output without affecting the process.
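One simple form of dynamic analysis is to observe a program while it runs. The sketch below, which assumes a toy recursive function `fib` chosen only for illustration, uses the standard `sys.setprofile` hook to count how often each Python function is entered during execution.

```python
import sys
from collections import Counter

call_counts = Counter()


def profiler(frame, event, arg):
    # The 'call' event fires each time a Python function is entered.
    if event == "call":
        call_counts[frame.f_code.co_name] += 1


def fib(n):
    """Naive recursive Fibonacci (hypothetical code under analysis)."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


sys.setprofile(profiler)
try:
    value = fib(5)
finally:
    sys.setprofile(None)  # always remove the hook again

print(value, call_counts["fib"])  # prints 5 15
```

The result is correct, but the call count reveals that the function is entered 15 times to compute a small value, which is the kind of runtime behavior that reading the code alone can miss.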

Statement Coverage

Statement coverage is one of the pivotal steps in the testing process.

It checks whether every statement in the code has been executed. Testers use the step because it is designed to execute every statement at least once. Statements that never run under the test suite point to untested code, where errors in the web application may be hiding.

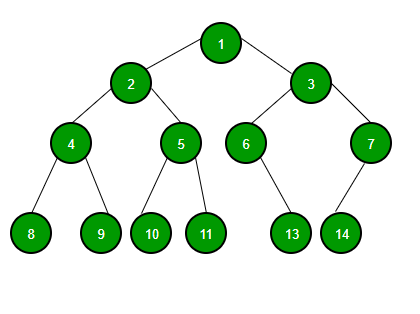
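The following sketch shows how statement coverage can be measured in principle. It uses the standard `sys.settrace` hook to record which lines of a function run for a given input. The function `classify` and the helper `executed_lines` are hypothetical, and dedicated coverage tools do this far more robustly.

```python
import sys


def classify(severity):
    label = "minor"          # offset 1
    if severity >= 5:        # offset 2
        label = "major"      # offset 3
    return label             # offset 4


def executed_lines(func, *args):
    """Run func(*args) and return the body line offsets that executed."""
    first = func.__code__.co_firstlineno
    seen = set()

    def tracer(frame, event, arg):
        if frame.f_code is not func.__code__:
            return None  # do not trace other functions
        offset = frame.f_lineno - first
        if event == "line" and offset > 0:  # offset 0 is the def line itself
            seen.add(offset)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(previous)  # restore whatever hook was active before
    return seen


low = executed_lines(classify, 1)
high = executed_lines(classify, 9)
print(len(low) / 4, len(low | high) / 4)  # prints 0.75 1.0
```

With only the low-severity input, the statement at offset 3 never runs, so coverage is 75 percent. Adding the high-severity input brings it to 100 percent.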

Branch Testing Coverage

Modern software and web applications are not coded as one continuous sequence. The code branches at decision points, such as if statements, which helps in segregating the logic effectively.

Branch coverage testing verifies that every possible branch in the code is exercised, for example both the true and the false outcome of each decision. The step makes it easier to find and rectify abnormal behavior in the application.
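As a rough sketch, branch coverage can be demonstrated by instrumenting a function by hand so that each branch records when it is taken. The function `shipping_fee`, its pricing rules, and the branch labels are all assumptions made for illustration. In practice a tool such as coverage.py with its `--branch` option collects this automatically.

```python
branches_taken = set()

ALL_BRANCHES = {
    "is_member:true", "is_member:false",
    "total>100:true", "total>100:false",
}


def shipping_fee(total, is_member):
    """Hypothetical function with two decisions, i.e. four branches."""
    fee = 5.0
    if is_member:
        branches_taken.add("is_member:true")
        fee = 0.0
    else:
        branches_taken.add("is_member:false")
    if total > 100:
        branches_taken.add("total>100:true")
        fee = 0.0
    else:
        branches_taken.add("total>100:false")
    return fee


def branch_coverage(test_inputs):
    """Run every input and return the fraction of branches exercised."""
    branches_taken.clear()
    for total, is_member in test_inputs:
        shipping_fee(total, is_member)
    return len(branches_taken) / len(ALL_BRANCHES)


print(branch_coverage([(150, True)]))               # prints 0.5
print(branch_coverage([(150, True), (20, False)]))  # prints 1.0
```

A single test input takes only the true side of both decisions, so half of the branches stay untested until a second input exercises the false sides.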

Security Testing

Security is one of the primary concerns and needs to be in place all the time. Most companies run security testing regularly. It is essential to have a process in place to protect the application or software.

Security testing is more of a process than a single step because it involves many internal steps. It looks for ways to gain unauthorized access to the system so that they can be fixed. The process helps in avoiding breaches caused by hacking or cracking.

Security testing requires a set of techniques and a sophisticated testing environment.
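One concrete white box security check is to confirm that user input cannot change the meaning of a database query. The sketch below uses the standard `sqlite3` module with an in-memory database. The `users` table and both lookup functions are hypothetical, and SQL injection is only one of many issues a real security test would cover.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Vulnerable: the input is pasted straight into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{username}'"
    return sorted(row[0] for row in conn.execute(query))


def find_user(conn, username):
    # Parameterized query: the driver treats username strictly as data.
    cur = conn.execute("SELECT name FROM users WHERE name = ?", (username,))
    return sorted(row[0] for row in cur)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT)")
conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])

injection = "nobody' OR '1'='1"

# The unsafe version leaks every row; the safe version returns nothing.
leaked = find_user_unsafe(conn, injection)
blocked = find_user(conn, injection)
print(leaked, blocked)  # prints ['alice', 'bob'] []
```

Because the tester can read the code, the vulnerable string concatenation is visible directly, and the test confirms the fix behaves as intended.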

Mutation Testing

Mutation testing is the last step in the process and requires a lot of time to complete effectively. It introduces small changes (mutants) into the code and re-runs the tests to check whether they detect the change.

The step is carried out to verify that the right testing strategy is in use. A mutant that survives shows where the tests or the code need to be improved.
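The idea behind mutation testing can be sketched by hand. Below, `is_adult_mutant` is a copy of the hypothetical function `is_adult` with one operator changed, and two small test suites are checked to see whether they notice the difference. Mutation testing tools generate and run such mutants automatically; every name here is an assumption made for illustration.

```python
def is_adult(age):
    """Original code (hypothetical)."""
    return age >= 18


def is_adult_mutant(age):
    """Mutant: '>=' has been replaced by '>'."""
    return age > 18


def weak_suite(fn):
    # Checks values far from the boundary only.
    return fn(30) is True and fn(5) is False


def strong_suite(fn):
    # Adds the boundary value, where original and mutant differ.
    return weak_suite(fn) and fn(18) is True


def mutant_killed(suite):
    """A mutant is killed when the suite passes on the original but fails on the mutant."""
    return suite(is_adult) and not suite(is_adult_mutant)


print(mutant_killed(weak_suite))    # prints False
print(mutant_killed(strong_suite))  # prints True
```

The weak suite lets the mutant survive, which signals a missing boundary test. The strong suite kills it.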